NASA coding standards, defensive programming and reliability

An 11-minute story written in Nov 2017 by Adrian B.G.

I am a software engineer, and I strive to learn something new every day. When I started researching defensive programming I stumbled upon an extreme use case: software built to run on other planets, far away from human interaction. I decided to share my findings.

This article is a text summary of more than 3 hours of video talks about the software written for NASA space exploration missions.

I am sorry about the length 🕮 of the article. The plan was to write 2 pages, but the videos are full of information and the subject is so intriguing to me.

Disclaimer: ⚠️I just made a summary of the videos, I have no affiliation with NASA, JPL or the speakers.

Context ⚓

What would you do if you received the following project?

- should allow manual and autonomous car driving

- it has to gather data from many attached sensors

- it has to send the gathered data 54M km away

- it cannot be tested in the production environment

- it’s hard to apply hotfixes and patches after the release

- if something goes wrong with the software we will lose $2B and waste many years of work

- the entire OS and software should run in 10 MB of RAM

- you do not have physical access to the hardware after release

- the average communication lag with the software is 15 minutes

The bottom line is that you must build complex software that runs impeccably from the very first run.

TL;DR 📰

If you have a few hours, I suggest watching all 3 videos. If you have 10 minutes, read the entire article. If you are in a hurry, here’s a short summary:

- test, test, test, test, test ... and then build more automated tests

- monitor the runtime using code metrics and memory snapshots

- impose static analysis and code reviews

- treat the warnings as build errors

- treat the code that you cannot control very harshly (pragma, libraries etc)

- write your code as if it will be attacked and everything can fail

- triple check the input data before using it

- with higher risks you have to apply higher and stricter coding standards. Code that can affect human lives should be treated more harshly than an input form.

- just because you can use a feature doesn't mean you should; not all programming language features are good for your project.

- enable fast development cycles using all the methods you can find (starting by replicating the live env locally)

- test again on all layers

What is defensive programming? 🛡️

Skip this part if you are familiar with the concept. Since it is such a generic, abstract term, I will just quote Wikipedia and refrain from personal comments.

Defensive programming is a form of defensive design intended to ensure the continuing function of a piece of software under unforeseen circumstances. Defensive programming practices are often used where high availability, safety or security is needed.

Defensive programming is an approach to improve software and source code, in terms of:

- General quality — reducing the number of software bugs and problems.

- Making the source code comprehensible — the source code should be readable and understandable so it is approved in a code audit.

- Making the software behave in a predictable manner despite unexpected inputs or user actions.

Mars Rover 🌑

Gerard Holzmann, a senior research scientist at NASA’s Jet Propulsion Laboratory, describes creating the software for the Curiosity rover in this video accompaniment to “Mars Code,” his article in the February 2014 Communications of the ACM.

Summary of the project: 4 million lines of code 🤓, split into over 100 modules. The code ran on over 120 threads on a single 133 MHz CPU 🖥 (with a backup computer). It took over 5 years and over 40 software engineers. The scope of the project is limited: 1 customer and one-time use. It has more C code than all the previous Mars missions combined.

The first problem they had: code reviews and pair programming don’t scale, so they had to rely on automated analysis.

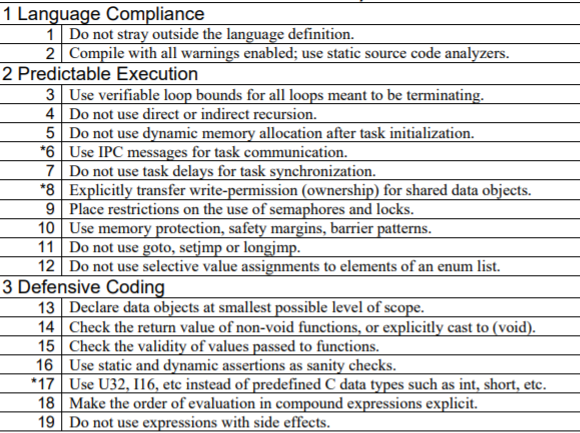

They needed new, improved Coding Standards that were based on risk management and could be enforced by tools. Based on previous experiences (mostly bad ones) they created 6 levels of compliance.

- LOC1 has only 2 rules: the code must build without warnings from the compiler or static code analyzers, and it must stay within the language definition.

- LOC2 is used to create predictable execution of the source code. The use of recursion and dynamic memory allocation is restricted.

- LOC3 deals with defensive coding and LOC4 with code clarity.

All the code used for flight must comply with all 4 levels. Levels 5 and 6 add further requirements and are reserved for code that can affect human life.

👓_You can read the specifics of LOC 1–4 here:_ JPL Institutional Coding Standard for the C Programming Language.

Further processes and tools were developed to ensure higher standards:

- training and certification for developers and managers

- use of 5 different static code analyzers, with all warnings fixed

- a tool-based code review process. Comments made by peers, with 3 levels of importance, were assigned to the module owners, acting as async code reviews.

- a wall of shame for the developers that had the most warnings

- nightly builds, because … a full compile and analysis run takes 15 hours

- no code outside standard C was allowed (for example, pragma directives)

- 100% coverage unit testing even for the defensive code (impossible scenarios)

- tests for logic verification on critical subsystems (race conditions, data corruption and loss, deadlocks, ...)

- use only mature tools and do not cut corners

They also tackled more abstract problems, like testing ALL the possible cases of a function. An algorithm example: you have to build a linked list used as a queue with 1 reader and 1 writer, without a mutex lock. You can achieve this by always leaving at least 1 item in it, so that no single operation has to update 2 variables (head and tail): the writer only touches the tail and the reader only touches the head. The speaker also mentioned the “Data Independence theorem by Pierre Wolper”, used to test concurrent algorithms.
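The queue idea above can be sketched in C++ roughly as follows. This is a minimal illustration, not the flight code: the class name `SpscQueue` and its methods are made up for this example, it uses `std::atomic` for the link between nodes, and it allocates nodes with plain `new` for brevity, which the flight rules would not allow (a real version would take nodes from a preallocated pool).

```cpp
#include <atomic>
#include <cassert>
#include <utility>

// Single-writer / single-reader queue on a linked list (hypothetical sketch).
// The list always keeps at least one node (a "dummy"), so the writer only
// touches tail_ and the reader only touches head_.
template <typename T>
class SpscQueue {
    struct Node {
        T value{};
        std::atomic<Node*> next{nullptr};
    };

    Node* head_;  // used only by the reader
    Node* tail_;  // used only by the writer

public:
    SpscQueue() : head_(new Node), tail_(head_) {}

    ~SpscQueue() {
        while (head_ != nullptr) {
            Node* next = head_->next.load(std::memory_order_relaxed);
            delete head_;
            head_ = next;
        }
    }

    SpscQueue(const SpscQueue&) = delete;
    SpscQueue& operator=(const SpscQueue&) = delete;

    // Called only by the writer thread.
    void push(T value) {
        Node* node = new Node;
        node->value = std::move(value);
        // Publish the node; "release" makes the value visible to the reader.
        tail_->next.store(node, std::memory_order_release);
        tail_ = node;
    }

    // Called only by the reader thread. Returns false when the queue is empty.
    bool pop(T& out) {
        Node* next = head_->next.load(std::memory_order_acquire);
        if (next == nullptr) {
            return false;  // only the dummy node is left
        }
        out = std::move(next->value);
        delete head_;  // retire the old dummy
        head_ = next;  // the node just read becomes the new dummy
        return true;
    }
};
```

Usage is `q.push(x)` on the writer thread and `q.pop(out)` on the reader thread. Because one node always stays in the list, head and tail never have to be changed by the same operation, which is what removes the need for a lock.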

You can watch the video here: Mars Code at CACM.

Mark Maimone “C++ on Mars: Incorporating C++ into Mars Rover Flight Software” 🛣

The previous video was about system modules and the project as a whole. This one is focused on the driving module, which was written in C++, unlike the rest of the project which uses C.

Besides the harsh environment (temperature swings of over 100 degrees every day 😎), the project has some draconian hardware limitations: a 133 MHz CPU with 128 MB of RAM, of which only 32 MB are usable. The OS (VxWorks), operations and module code all fit in 10 MB of shared RAM. There are over 100 modules that must communicate with each other through message queues.

All the commands received from the ground controls are heavily verified: can the command be run, are all the resources available, do I have enough free resources to make new pictures, can I access all the components required to execute the command, is now the right time to run the command and so on.
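A check-before-execute step like the one described above could look roughly like this. Everything here is an assumption made for illustration: the `Command` and `RoverState` fields, the `Verdict` values and the `validate` function are hypothetical and do not come from the talk.

```cpp
#include <cassert>
#include <cstdint>

// All names and fields below are hypothetical, for illustration only.
enum class CommandKind : std::uint8_t { TakePicture, Drive };

struct Command {
    CommandKind kind;
    std::uint32_t earliest_time_s;    // do not run before this time
    std::uint32_t latest_time_s;      // do not run after this time
    std::uint32_t storage_needed_kb;  // space the command will consume
};

struct RoverState {
    std::uint32_t now_s;
    std::uint32_t free_storage_kb;
    bool camera_ok;
    bool wheels_ok;
};

enum class Verdict {
    Accepted,
    MalformedWindow,
    TooEarly,
    TooLate,
    NotEnoughStorage,
    HardwareUnavailable,
    UnknownCommand
};

// Every check must pass before the command is allowed to run.
Verdict validate(const Command& cmd, const RoverState& state) {
    if (cmd.earliest_time_s > cmd.latest_time_s) return Verdict::MalformedWindow;
    if (state.now_s < cmd.earliest_time_s) return Verdict::TooEarly;
    if (state.now_s > cmd.latest_time_s) return Verdict::TooLate;
    if (state.free_storage_kb < cmd.storage_needed_kb) return Verdict::NotEnoughStorage;

    switch (cmd.kind) {
        case CommandKind::TakePicture:
            return state.camera_ok ? Verdict::Accepted : Verdict::HardwareUnavailable;
        case CommandKind::Drive:
            return state.wheels_ok ? Verdict::Accepted : Verdict::HardwareUnavailable;
    }
    // Defensive default: a corrupted kind value is rejected, never executed.
    return Verdict::UnknownCommand;
}
```

Returning a specific verdict instead of a plain boolean means the reason for a rejection can be reported back to the ground, which matters when a round trip takes many minutes.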

10% of the Curiosity rover code belongs to the autonomous driving module. It handles all the images captured of the surroundings and does a lot of analysis on them: where am I now, am I slipping, is it safe to go that route and so on. It was built with C++ because the language allowed a higher level of abstraction, which enabled faster development.

🔨C++ was brought into the company in the year 2000. The speaker explains how hard it is to introduce new technologies. The main objection was: why change something that worked so well in the past, the C language? All the previous missions used it, any new technology adds new risks to the table, and everything must be retested.

The speaker was in charge of the 🚓Driving module of the rover. The rover has 2 drive methods: manual (humans take most of the decisions) and autonomous, which is slower. The autonomous mode uses many metrics to make decisions, most of them derived from the images taken by the 15 cameras attached to the vehicle. The system needs to stop every meter to take 4 sets of images and analyze them to search for new hazards. Also, all the image processing is done only when sitting still!
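The stop-and-look cycle described above can be sketched as a bounded loop. This is only an illustration under stated assumptions: `drive_autonomously`, `DriveResult`, the `hazard_at` callback (a stand-in for the real image analysis) and the `kMaxSteps` limit are hypothetical; only the "stop every meter" and "4 sets of images" figures come from the talk.

```cpp
#include <cassert>

const int kImageSetsPerStop = 4;  // from the talk: 4 sets of images per stop
const int kMaxSteps = 100;        // hypothetical hard upper bound for one drive

struct DriveResult {
    int meters_driven;
    int image_sets_taken;
    bool stopped_for_hazard;
};

// hazard_at is a stand-in for the image analysis: it returns true when a
// hazard is detected ahead of the given position (in meters).
DriveResult drive_autonomously(int target_meters, bool (*hazard_at)(int meter)) {
    DriveResult result = {0, 0, false};
    if (target_meters < 0 || hazard_at == nullptr) {
        return result;  // reject invalid input instead of guessing
    }
    if (target_meters > kMaxSteps) {
        target_meters = kMaxSteps;  // the loop below always has a fixed bound
    }
    for (int step = 0; step < target_meters; ++step) {
        // The rover is standing still here: take the images and analyze them.
        result.image_sets_taken += kImageSetsPerStop;
        if (hazard_at(result.meters_driven)) {
            result.stopped_for_hazard = true;
            break;
        }
        result.meters_driven += 1;  // drive one meter, then stop again
    }
    return result;
}

// Two toy hazard detectors used to exercise the loop.
bool never_hazard(int) { return false; }
bool hazard_from_two_meters(int meter) { return meter >= 2; }
```

With `never_hazard` a 3 meter drive completes after 3 stops; with `hazard_from_two_meters` the rover halts at the 2 meter mark, after analyzing images at its third stop.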

The rover has some mechanical constraints too: it cannot drive and steer at the same time, top speed is 100m/hour but it has a lot of torque (one wheel can pull half of the vehicle).

They adopted C++ in the end, but only for 1 module and without using all the features it has. When they tried to introduce it as a new language, the teams raised many concerns, and they decided to address them by not using some features or by limiting their use.

Exceptions ⚡ add too much uncertainty to the project, and the risk of failing to catch an exception is too high. Templates can add code bloat, and the resources are very limited. IOStream is kind of useless when the console is … on another planet. Multiple inheritance raised many concerns, and they didn’t need it anyway. Operator overloading is confusing in big projects and teams, so they allowed overloading only of the “new” and “delete” operators. Dynamic allocation can be problematic with limited resources, so they used a dedicated memory pool and the Placement New technique (specifying where in memory to construct the new object).

To address the memory allocation concerns they decided to split the RAM and give a small part to the C++ module. This way, if something goes wrong, it doesn’t affect the other modules, which were critical to the mission.
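A dedicated pool combined with placement new can be sketched like this. It is a simplified illustration, not the rover's allocator: `Arena`, `Waypoint` and `make_waypoint` are hypothetical names, and the pool here is a simple bump allocator that never frees individual objects.

```cpp
#include <cassert>
#include <cstddef>
#include <new>

// A fixed-size pool: the memory is reserved once, then handed out piece by
// piece. Nothing outside this buffer is ever touched.
template <std::size_t N>
class Arena {
    alignas(std::max_align_t) unsigned char buffer_[N];
    std::size_t used_ = 0;

public:
    // Returns nullptr when the pool is exhausted. `align` is assumed to be a
    // non-zero power of two no larger than alignof(std::max_align_t).
    void* allocate(std::size_t size, std::size_t align) {
        const std::size_t start = (used_ + align - 1) / align * align;
        if (start > N || size > N - start) {
            return nullptr;
        }
        used_ = start + size;
        return buffer_ + start;
    }

    std::size_t used() const { return used_; }
};

struct Waypoint {
    double x;
    double y;
    Waypoint(double x_, double y_) : x(x_), y(y_) {}
};

// Placement new: construct the object at an address chosen by the pool
// instead of asking the global heap for memory.
template <std::size_t N>
Waypoint* make_waypoint(Arena<N>& arena, double x, double y) {
    void* slot = arena.allocate(sizeof(Waypoint), alignof(Waypoint));
    if (slot == nullptr) {
        return nullptr;  // pool exhausted: the caller must handle this
    }
    return new (slot) Waypoint(x, y);
}
```

`Waypoint` is trivially destructible, so no explicit destructor call is needed here; for types with real destructors the pool's user would have to call them by hand. The point of the design is containment: when the pool runs out, only the module that owns it sees a failed allocation.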

You can watch the video on YouTube.

Learnings

For monitoring purposes, every decision the autonomous system takes is logged and sent to the base with specific details, the image analysis results and a memory snapshot, so that possible memory leaks and other issues can be detected and traced back.

Besides the plethora of unit tests, they also had a dedicated team for testing the software on real rover replicas, out in the wild.

Local computer tests should be relevant, using the same compilation and memory allocation system. They had many layers of testing, including tests with some modules shut down, simulating the loss of one of the cameras for example.

Abstraction can help developers build more “human” 👔 commands while still letting the “machine” decide on its own when and how to execute them, in an optimal and safe way.

Applying NASA coding standards to dynamic languages 🌊

A gaming company started to apply the JPL coding standards to JavaScript; here is one of their talks at JSConf 2017. They tried to translate some of the reasoning behind NASA coding standards into a wider, looser definition:

- NASA => JavaScript

- No function should be longer than a single sheet of paper 📝 => 1 function should do only 1 simple thing

- Only use simple control flows, no goto statements or recursion => write predictable code, follow coding standards and use static analysis

- Do not use dynamic memory allocation after initialization => Measure (benchmark) and compare (profile, memory snapshots) to detect possible memory leaks. Use object pooling, write clean code and use ESLint no-unused-vars.

- All loops ➰ must have a fixed upper bound. => they recommend not following this rule; we need flexibility and recursion.

- Assertion density should be at least 2 per function. => The minimum number of tests is 2 per function; a higher density is better, of course. Watch for runtime anomalies.

- Data objects must be declared at the smallest possible level of scope. => No shared state.

- The return value of functions must be checked by each calling function, and the validity of parameters must be checked inside each function. => we should skip it

- Use of the preprocessor must be limited. => JavaScript is transpiled by each browser, so we must monitor the performance of our code.

- The use of pointers should be restricted. => Call chains and loose coupling should be used more often.

- Code must be compiled with warnings enabled. ☠️ => Keep the project green from the first day of dev. If the tests are failing: prioritize, refactor and add new tests.
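For comparison, here is what several of the rules on the NASA side of the list (fixed loop bound, parameter checks, checked return values, smallest scope) can look like in practice. The sketch is in C++ rather than JavaScript, and every name in it (`kMaxSamples`, `Status`, `average`, `average_or`) is hypothetical and made up for this example.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical compile-time limit: the loop below can never exceed it.
const std::size_t kMaxSamples = 64;

enum class Status { Ok, BadArgument };

// Computes the integer average of `count` samples and writes it to *out.
Status average(const int* samples, std::size_t count, int* out) {
    // The validity of parameters is checked inside the function.
    if (samples == nullptr || out == nullptr) {
        return Status::BadArgument;
    }
    if (count == 0 || count > kMaxSamples) {
        return Status::BadArgument;
    }

    long long sum = 0;
    // Fixed upper bound: count <= kMaxSamples was verified above.
    for (std::size_t i = 0; i < count; ++i) {
        sum += samples[i];
    }
    *out = static_cast<int>(sum / static_cast<long long>(count));
    return Status::Ok;
}

// The caller checks the return value instead of assuming success.
int average_or(const int* samples, std::size_t count, int fallback) {
    int result = 0;  // declared at the smallest possible scope
    if (average(samples, count, &result) != Status::Ok) {
        return fallback;
    }
    return result;
}
```

The two guard checks at the top of `average` play the role of the "2 assertions per function" rule: they never change state, and a failed check leads to an explicit recovery path instead of a crash.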

The bottom line is that it is harder to do defensive programming in JavaScript: you do not have type checks and cannot control the execution environment, so you will have to write a lot of code to compensate.

⚠️Disclaimer: this article is just a summary of the videos; it doesn’t mean I agree or disagree with their statements. I will write some personal posts about these topics but this is not one of them.

The moral: if we can “train” rocks (CPUs) with electricity to execute human instructions, on another planet, we can do anything!

Extra resources 📚

- Wikipedia

- Java and OOP intro into Defensive Programming: Best practices

- The art of Defensive Programming by Diego Mariani

- Defensive Programming vs Batshit Crazy Paranoid Programming

- Security with .NET web apps — Defensive Programming Niall Merrigan